Wednesdays are the day for my weekly post/column, and while I was debating what to share today, I went on Twitter¹ and saw a link to this post about how to opt out of Meta (aka Facebook) using your information to train AI.

When I’m not feeling nervous about the upcoming election, the rising cost of everything, or my cat’s health, I’m feeling angsty and frustrated by AI. It is ubiquitous and insidious. While I do think it could be used for good, in so many ways it seems to be used for evil. And all my years in evangelical Christian schools taught me to stay away from evil.

I found this long, somewhat confusing article about Meta and its AI training, but the gist here is that Meta wants to take the public content you share on your profile and use it to train AI. Not the stuff you share with friends only, or your private messages, but the posts, photos, etc. you make public.

I also found Meta’s June 10 letter focusing on the European market, where there are laws that actively protect citizens and their online data. This letter is laughable because their approach is, “Well, we’re better than other companies because we’re actually telling you ahead of time we’re going to steal your data.” So if a bank robber walks into a bank and announces, “This is a robbery,”² does that make them better than other robbers? Here are the takeaways from the letter written by Stefano Fratta, Global Engagement Director, Meta Privacy Policy:

We are following the example set by others, including Google and OpenAI, both of which have already used data from Europeans to train AI. Our approach is more transparent and offers easier controls than many of our industry counterparts already training their models on similar publicly available information.

Models are built by looking at people’s information to identify patterns, like understanding colloquial phrases or local references, not to identify a specific person or their information.

We’re not using people’s private messages with friends and family to train our AI systems, nor do we use content from accounts of Europeans under age 18.

We’re using content that people have chosen to make public to build our foundational AI model that we release openly.

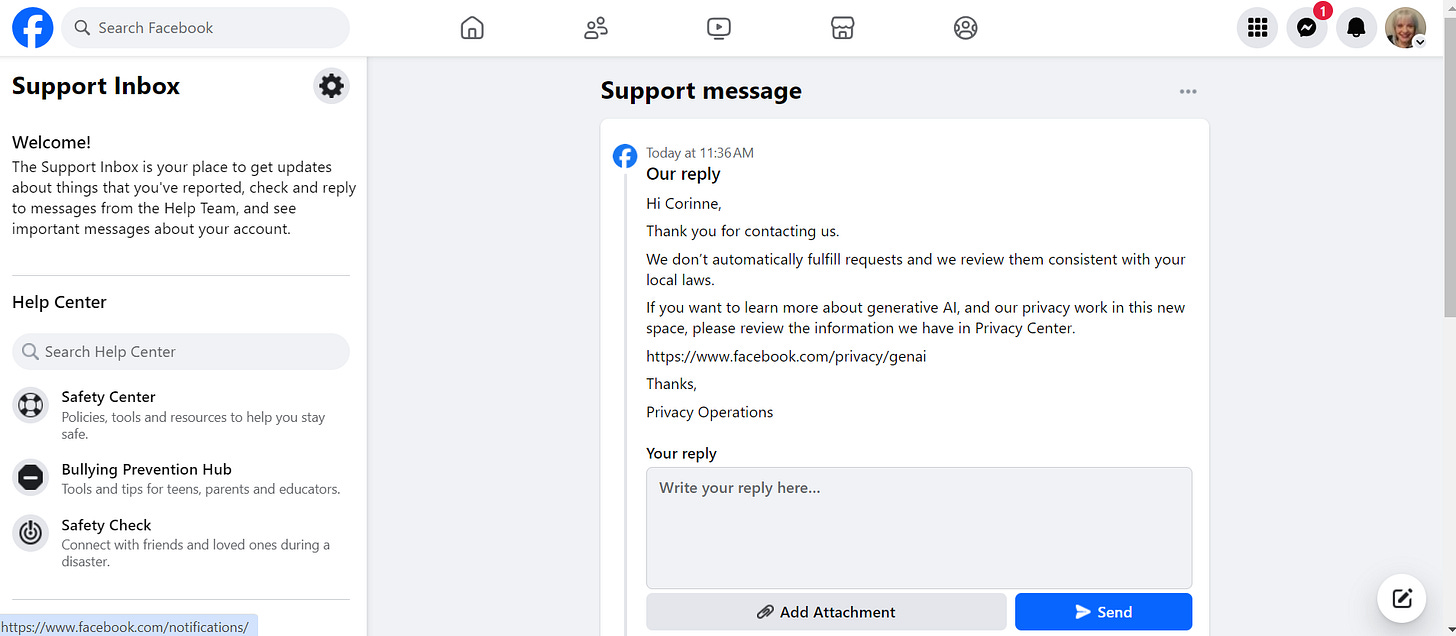

I went through the steps Deborah Copaken listed in her post. A minute or so after I submitted my request, I got this message back from Meta, which says to me they see all of this as performative and they won’t do shit about data privacy in the US until there are laws in place making them stop.

Scraping is fine when it involves bowls of cookie dough or cake batter. Scraping is also a slightly uncomfortable yet necessary part of diagnosing many diseases and disorders. But when you come to scrape away at my personal data, I have a major problem.

I’m far from ready to completely remove my online presence. It’s been helpful many times over, and my skills with social media and website creation have gotten me many paid gigs over the last 15 years. But I hate what it’s all become, and I welcome any opportunity to set parameters and boundaries on how all these products, services, apps and programs use the information we share with them.

¹ Not calling it X just because Apartheid Clyde says so.